The Complete Guide to Google Search Console Analysis (2026)

Master Google Search Console with this comprehensive 10,000-word guide. Analyze performance data, fix indexing issues, optimize Core Web Vitals, and turn GSC insights into traffic growth.

The Complete Guide to Google Search Console Analysis

Google Search Console is the most powerful free SEO tool available, yet most website owners barely scratch its surface. Beginners might check their click counts occasionally, but power users leverage GSC to uncover hidden opportunities, diagnose technical issues before they impact rankings, and make data-driven decisions that drive sustainable organic growth. The difference between these two approaches isn't just knowledge; it's results. This guide takes you from casual GSC user to someone who can extract the insights, fix the issues, and capitalize on the opportunities hiding in your data.

What This Guide Covers:

Across 9,500+ words, you'll learn everything from basic setup to advanced analysis techniques. We cover all major GSC reports, explain what your data means, show you how to fix common issues, and teach you to connect metrics to business outcomes.

Who This Guide Is For:

- Beginners: Never used Google Search Console? New to SEO entirely? Start with our SEO basics guide first, then return here for GSC mastery.

- Intermediate users: Already familiar with the basics? Jump to advanced filtering, Core Web Vitals, or API access sections.

- Advanced SEOs: Use this as your reference guide and discover techniques you may have missed.

- Business owners: Translate GSC data into revenue and growth insights.

How to Use This Guide:

Read straight through for comprehensive mastery, or use the table of contents to jump directly to the sections most relevant to your needs. Each section stands alone while linking to related topics for deeper dives.

Table of Contents

- What Is Google Search Console?

- Setting Up Google Search Console Properly

- Performance Report: Your Most Important Data

- Index Coverage Report: Ensuring Google Sees Your Content

- URL Inspection Tool: Explore Individual Pages

- Core Web Vitals Report: Page Experience Signals

- Mobile Usability Report

- Enhancements: Rich Results and Structured Data

- Security & Manual Actions

- Links Report: Understanding Your Backlink Profile

- Experience Report: Aggregated Page Experience

- Sitemaps Section: Keeping Google Updated

- Removals: Temporarily Removing Content from Search

- Crawl Stats Report: Understanding Googlebot Activity

- Settings & Users: Managing Your Property

- Common GSC Pitfalls and How to Avoid Them

- Connecting GSC Data to Business Outcomes

- Advanced GSC Analysis Techniques

- GSC Best Practices Checklist

- Conclusion & Next Steps

The History and Evolution of GSC

Google Search Console hasn't always existed in its current form. Understanding its evolution helps explain some of its quirks and why certain features exist.

Google Webmaster Tools Era (2006-2015): Originally launched as Google Sitemaps in 2005 and rebranded to Google Webmaster Tools in 2006, the platform primarily focused on technical webmaster tasks: submitting sitemaps, identifying crawl errors, and monitoring backlinks. The interface was utilitarian and the data was basic.

Transition to Search Console (2015): Google rebranded Webmaster Tools to Search Console in 2015, signaling a shift from a purely technical tool to a broader search performance platform. This change acknowledged that SEO had evolved beyond webmasters: marketers, business owners, and content creators needed search data too.

Key Feature Additions Over Time:

- 2016: Performance Report introduced

- 2018: New GSC interface rolled out, major UX overhaul

- 2019: Domain properties introduced via DNS verification

- 2020: Core Web Vitals report added as page experience became a ranking signal

- 2021: Page Experience report introduced

- 2024: INP replaced FID (First Input Delay) as a Core Web Vital

- 2025-2026: Enhanced mobile-first indexing reporting and improved indexing transparency

Mobile-First Indexing Changes: Perhaps the most significant evolution came with Google's shift to mobile-first indexing (fully rolled out by 2021). GSC adapted to show mobile crawl data as primary, reflecting how Google now predominantly uses the mobile version of your content for indexing and ranking. This fundamentally changed how SEOs needed to use GSC: desktop data became secondary.

GSC vs Google Analytics: Understanding the Difference

One of the most common points of confusion: "Why doesn't my GSC click data match my Google Analytics sessions?" These tools measure fundamentally different things.

Google Search Console Measures:

- Search appearances: How many times your site appeared in search results (impressions)

- Search clicks: Clicks from Google Search to your site

- Pre-visit data: Queries, positions, CTR before users arrive

- Google's perspective: How Google sees and crawls your site

- Technical health: Indexing status, crawl errors, mobile usability

Google Analytics Measures:

- On-site behavior: What visitors do after arriving

- All traffic sources: Organic, paid, social, direct, referral

- User engagement: Time on site, pages per session, conversions

- User perspective: How real humans interact with your content

- Business outcomes: Goals, ecommerce transactions, conversion funnels

Why the Numbers Don't Match:

- GSC counts clicks; GA counts sessions: One click can create multiple sessions (user leaves and returns)

- Tracking limitations: Ad blockers affect GA but not GSC

- Data processing differences: Different attribution models and time zones

- Safari ITP and privacy: GA impacted more by privacy features

- Bot filtering: GA filters bots; GSC shows actual search clicks including some bots

When to Use Which Tool:

Use Google Search Console when you need to:

- Identify keyword opportunities

- Diagnose technical SEO issues

- Understand search visibility and performance

- Monitor indexing status

- Track Core Web Vitals and mobile usability

- Find which pages appear in search

Use Google Analytics when you need to:

- Understand user behavior on-site

- Track conversions and revenue

- Analyze traffic sources beyond Google Search

- Measure engagement metrics

- Build customer journey funnels

How They Complement Each Other: The best insights come from using both together:

- GSC shows a query driving impressions but low clicks → Check GA to see if the landing page has high bounce rate

- GA shows organic traffic dropped → Check GSC to see if rankings or impressions declined

- GSC shows pages getting clicks → Check GA to see if those clicks convert

New to SEO? Learn the fundamentals in our SEO basics guide before diving into GSC analysis.


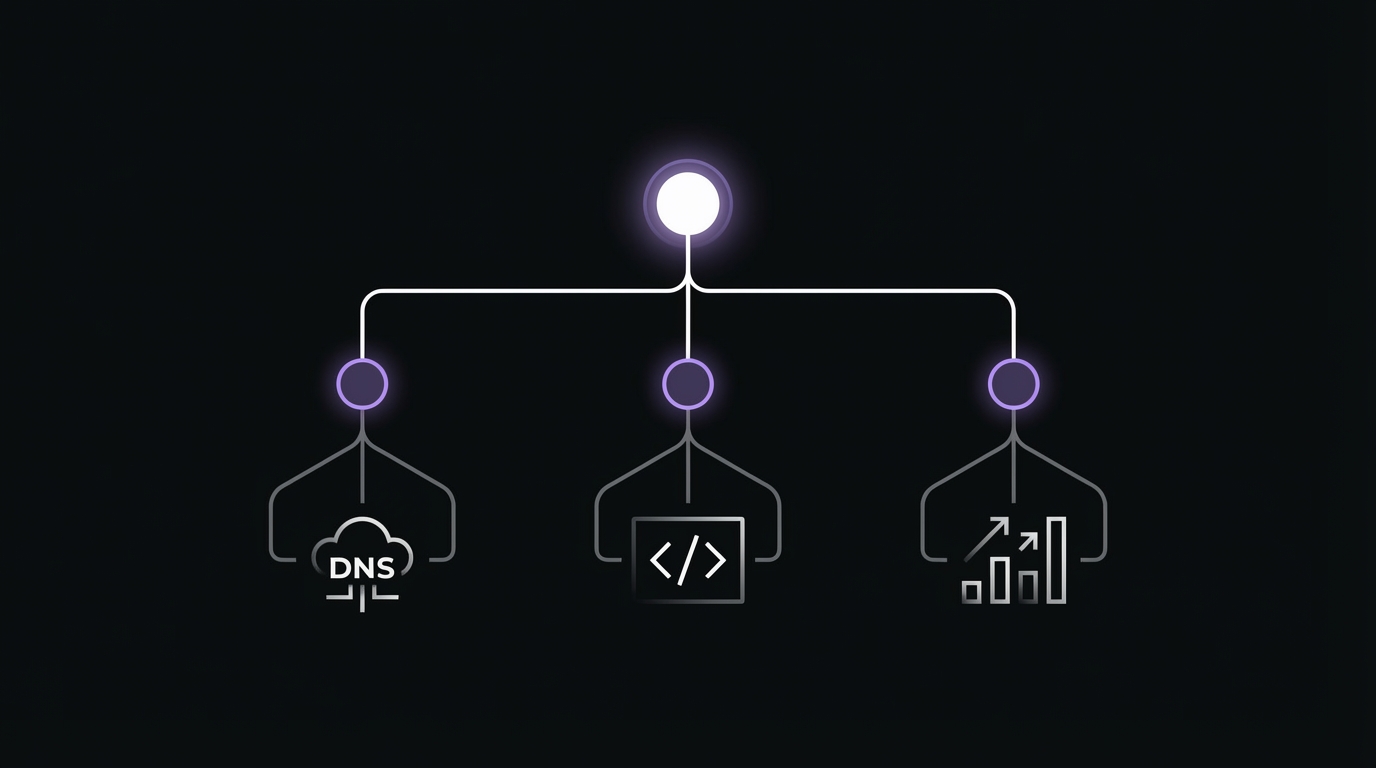

Setting Up Google Search Console Properly

Proper GSC setup is critical—mistakes here mean incomplete data or, worse, no data at all. Many site owners discover months later they've been collecting data for the wrong property variation or missing subdomains entirely. For a complete, step-by-step setup tutorial, see our dedicated guide: How to Set Up Google Search Console for Accurate Data Collection. The overview below covers key concepts, but the full guide includes troubleshooting, verification method comparisons, and post-setup configuration.

Verification Methods

Google offers five verification methods, each with distinct advantages and ideal use cases. Choosing the right one prevents future headaches.

HTML File Upload

How it works: Download a unique HTML file from GSC and upload it to your website's root directory.

Pros:

- Simple, straightforward process

- No code modifications needed

- One-time setup

Cons:

- Requires FTP or hosting panel access

- File can be accidentally deleted during site migrations

- Verification is lost if the file is removed

Best for: Static sites, users comfortable with file management, developers with hosting access.

Step-by-step:

- In GSC, select HTML file verification method

- Download the verification file

- Upload to your root directory

- Verify file loads in browser

- Return to GSC and click "Verify"

HTML Meta Tag

How it works: Add a meta tag to your homepage's <head> section.

Pros:

- CMS-friendly

- No file management required

- Easy to implement via plugins or theme editors

Cons:

- Must remain on homepage permanently

- Can be accidentally removed during theme updates

- Only works if properly placed in <head>

Best for: WordPress sites, CMS platforms, non-technical users, sites using tag managers.

Step-by-step:

- Copy the meta tag from GSC

- Access your site's header:

  - WordPress: Appearance → Theme Editor or SEO plugin settings

  - Shopify: Online Store → Themes → Edit Code

  - Other CMS: Header injection or tag manager

- Paste tag inside the <head> section of homepage

- Save and verify tag appears in page source

- Return to GSC and click "Verify"

[Screenshot: Meta tag in GSC interface]

Google Analytics Verification

How it works: If Google Analytics tracking code is already on your site and you have Admin access to the GA property, GSC can verify through GA.

Pros:

- Instant verification if GA is already set up

- No additional code needed

- Automatic if prerequisites are met

Cons:

- Requires GA tracking code on all pages

- Must have Admin access to GA property

- Verification tied to GA property

Best for: Sites already using Google Analytics, quick verification, connecting GSC and GA data.

Requirements:

- Google Analytics tracking code installed site-wide

- Admin role in Google Analytics property

- Same Google account for GSC and GA

[Screenshot: Google Analytics verification option]

Google Tag Manager Verification

How it works: Verifies through your existing GTM container.

Pros:

- Works if GTM is already implemented

- Container-level verification

- No additional tags needed

Cons:

- Requires published GTM container

- Must have Publish permission in GTM

- Less common than GA method

Best for: Sites using GTM, advanced implementations, marketing teams already managing tags via GTM.

Requirements:

- GTM container published and live on site

- Publish permission in Google Tag Manager account

- Container loading on all pages

[Screenshot: GTM verification method selection]

DNS Verification

How it works: Add a TXT record to your domain's DNS settings at your domain registrar.

Pros:

- Verifies entire domain including all subdomains

- Most robust method: never affected by site changes

- Required for domain properties

- Professional standard

- Persists through site migrations, platform changes, redesigns

Cons:

- Requires DNS access

- DNS propagation can take 5 minutes to 48 hours (usually <1 hour)

- Slightly more technical than other methods

Best for: Domain properties (required), agencies managing multiple sites, professional implementations, long-term stability.

Step-by-step:

- In GSC, select DNS verification method

- Copy the TXT record value

- Log in to your domain registrar

- Navigate to DNS settings/DNS management

- Add new TXT record:

  - Host/Name: @

  - Type: TXT

  - Value: Paste the verification string from GSC

  - TTL: Automatic or 3600

- Save DNS record

- Wait for DNS propagation

- Return to GSC and click "Verify"

Troubleshooting DNS verification:

- If verification fails, wait longer

- Ensure no extra spaces in TXT record value

- Verify you added to root domain, not subdomain

- Use DNS checker tool: mxtoolbox.com/TXTLookup.aspx

[Screenshot: DNS TXT record in GSC]

[Screenshot: Example DNS settings panel showing TXT record]
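Some of the troubleshooting checks above can be scripted once you have the TXT values in hand. The following is a minimal sketch, not an official tool: `find_verification_issues` is a hypothetical helper, it assumes you have already looked up the TXT records for the root domain with a DNS checker, and it assumes the verification string starts with `google-site-verification=`.

```python
PREFIX = "google-site-verification="  # assumed prefix of the string GSC gives you


def find_verification_issues(txt_records, expected_value):
    """Return a list of problems; an empty list means the record looks correct.

    txt_records: TXT values already fetched for the root domain (strings).
    expected_value: the exact verification string copied from GSC.
    """
    candidates = [record for record in txt_records if PREFIX in record]
    if not candidates:
        return ["no google-site-verification TXT record found on this host"]
    if expected_value in candidates:
        return []

    issues = []
    for record in candidates:
        # Removing all whitespace catches leading, trailing, and inner spaces.
        if "".join(record.split()) == expected_value:
            issues.append("record contains extra whitespace")
        else:
            issues.append("record value does not match the string from GSC")
    return issues
```

If the function reports that no record was found, the usual causes are the ones listed above: propagation has not finished, or the record was added to a subdomain instead of the root domain.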

Which Verification Method Should You Choose?

Decision flowchart:

- Setting up a domain property? → Use DNS verification (required)

- Already using Google Analytics or GTM? → Use GA or GTM verification (fastest)

- Have FTP/hosting access? → Use HTML file upload

- Using a CMS like WordPress? → Use HTML meta tag (easiest)

- Want maximum stability? → Use DNS verification

Property Types: Domain vs URL Prefix

This is one of the key decisions in GSC setup, yet many users don't understand the difference.

Domain Property (sc-domain:example.com)

What it includes:

- All subdomains: www, blog, shop, help, m, etc.

- All protocols: http AND https

- All paths on all subdomains

Example: A domain property for example.com includes:

- https://www.example.com

- http://example.com

- https://blog.example.com

- https://shop.example.com/products

- https://m.example.com

Verification: DNS only (TXT record required)

Advantages:

- Complete data in one place

- Automatically includes new subdomains

- Simplified management

- Reflects how most sites operate

- Recommended by Google as modern standard

URL Prefix Property (e.g., https://www.example.com)

What it includes:

- Specific protocol only

- Specific subdomain only

- All paths under that exact URL prefix

Example: A URL prefix property for https://www.example.com includes:

- https://www.example.com/blog/post

- https://www.example.com/products

But does NOT include:

- http://www.example.com (different protocol)

- https://example.com (different subdomain)

- https://blog.example.com (different subdomain)

Verification: Any method

Advantages:

- Granular data separation

- Specific subdomain tracking

- Works if you don't have DNS access

[Diagram: Domain vs URL prefix coverage visualization]
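The coverage rules for the two property types can be expressed as a small check. This is a sketch under stated assumptions: `url_in_property` is a hypothetical helper (it is not part of GSC), and it treats a URL prefix property as a simple path-boundary prefix match, which may differ from how GSC handles edge cases.

```python
from urllib.parse import urlsplit


def url_in_property(url, prop):
    """Return True if `url` would be covered by the property string `prop`.

    prop is either "sc-domain:example.com" (domain property)
    or a URL prefix such as "https://www.example.com".
    """
    if prop.startswith("sc-domain:"):
        # Domain property: any protocol, the bare domain, and every subdomain.
        domain = prop[len("sc-domain:"):].lower()
        host = (urlsplit(url).hostname or "").lower()
        return host == domain or host.endswith("." + domain)

    # URL prefix property: protocol, host, and leading path must all match.
    prefix = prop if prop.endswith("/") else prop + "/"
    return url == prop or url.startswith(prefix)
```

For example, `url_in_property("https://blog.example.com/post", "sc-domain:example.com")` is true, while the same URL checked against the prefix `https://www.example.com` is false.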

Which Property Type Should You Use?

Use Domain Property if:

- You want complete data in one view

- You have subdomains

- You're setting up GSC for the first time

- You have DNS access

- You want simplicity and completeness

Use URL Prefix Property if:

- You need to separate http vs https data (rare need)

- You need to isolate www vs non-www data (rare need)

- You manage subdomains as completely independent sites

- You don't have DNS access

- You need to track a specific subdomain separately

Recommendation: Domain property is the modern standard. Use this unless you have a specific, compelling reason not to.

Can You Have Both?

Yes, and this is common during transitions or for specific troubleshooting:

- Domain property for overall performance and complete picture

- URL prefix properties for specific subdomain deep-dives

- Data is not duplicated; they're different views of the same underlying data

Example property structure for a site with blog subdomain:

- sc-domain:example.com (domain property, main view)

- https://www.example.com

- https://blog.example.com

This gives aggregate data in the domain property while allowing detailed analysis of specific subdomains.

[Screenshot: Multiple properties for the same site in property selector]

Initial Setup Checklist

After verifying your property, complete these critical configuration steps:

1. Submit Your Sitemap

Sitemaps tell Google which pages you want indexed and how they're organized.

Steps:

- Navigate to Sitemaps section in left sidebar

- Enter your sitemap URL:

  - WordPress: Usually /sitemap.xml or /sitemap_index.xml

  - Shopify: /sitemap.xml

  - Custom sites: Check your CMS or consult developer

- Click Submit

- Wait for processing (can take hours to days)

- Check for "Success" status

Common sitemap locations:

- /sitemap.xml

- /sitemap_index.xml

- /sitemap.xml.gz

- /wp-sitemap.xml (WordPress 5.5+)

[Screenshot: Sitemap submission and success confirmation]

2. Set Up Email Notifications

Don't miss critical alerts about your site's search presence.

Steps:

- Go to Settings (gear icon)

- Find "Email notifications" section

- Configure alert preferences:

  - Recommended: Enable all alerts

  - Critical: Manual actions, security issues, indexing problems

- Add multiple email addresses for redundancy

What you'll be notified about:

- Manual actions (penalties)

- Security issues (hacking, malware)

- Critical indexing errors

- Core Web Vitals degradation

- Mobile usability issues

3. Connect to Google Analytics

Linking GSC and GA4 enables richer analysis and cross-platform insights.

Steps:

- In GSC, go to Settings → Associations

- Click "Associate" next to Google Analytics

- Select your GA4 property

- Confirm association

Benefits:

- View GSC data inside GA4 reports

- Correlate search queries with on-site behavior

- Build unified dashboards

- Better attribution modeling

4. Add Team Members

Give your team appropriate access without sharing your Google account.

Permission levels:

- Owner: Full control, can add/remove users, delete property

- Full User: View all data, take most actions (cannot manage users)

- Restricted User: View most data, limited actions

Steps:

- Go to Settings → Users and permissions

- Click "Add user"

- Enter email address

- Select permission level

- User receives email invitation

Best practices:

- Have at least 2 owners (prevents lockout)

- Agencies: Use Full User, not Owner

- Content team: Restricted User is sufficient

- Document who has access and why

[Screenshot: Settings panel with key configurations highlighted]

5. Set International Targeting (if applicable)

Only relevant for URL prefix properties targeting specific countries.

When to use:

- URL prefix property only

- Site targets specific country

- Using ccTLD isn't feasible

Steps:

- Go to Settings (in legacy section)

- Find International Targeting

- Select target country

Managing Multiple Properties

Common scenarios requiring multiple GSC properties:

Scenario 1: Subdomains

- Solution: Domain property covers everything automatically

- Alternative: Separate URL prefix for each subdomain if managed by different teams

Scenario 2: International Sites (Different Domains)

- example.com (US)

- example.co.uk (UK)

- example.de (Germany)

- Solution: Separate domain property for each ccTLD

Scenario 3: Migration (HTTP to HTTPS)

- Setup: Add HTTPS property before migration

- During migration: Monitor both properties

- After migration: HTTPS property becomes primary, keep HTTP for historical reference

Scenario 4: Subdirectories by Market

- example.com/us/

- example.com/uk/

- example.com/de/

- Solution: One domain property, use filters to analyze each market

[Screenshot: Property selector showing organized multiple properties]

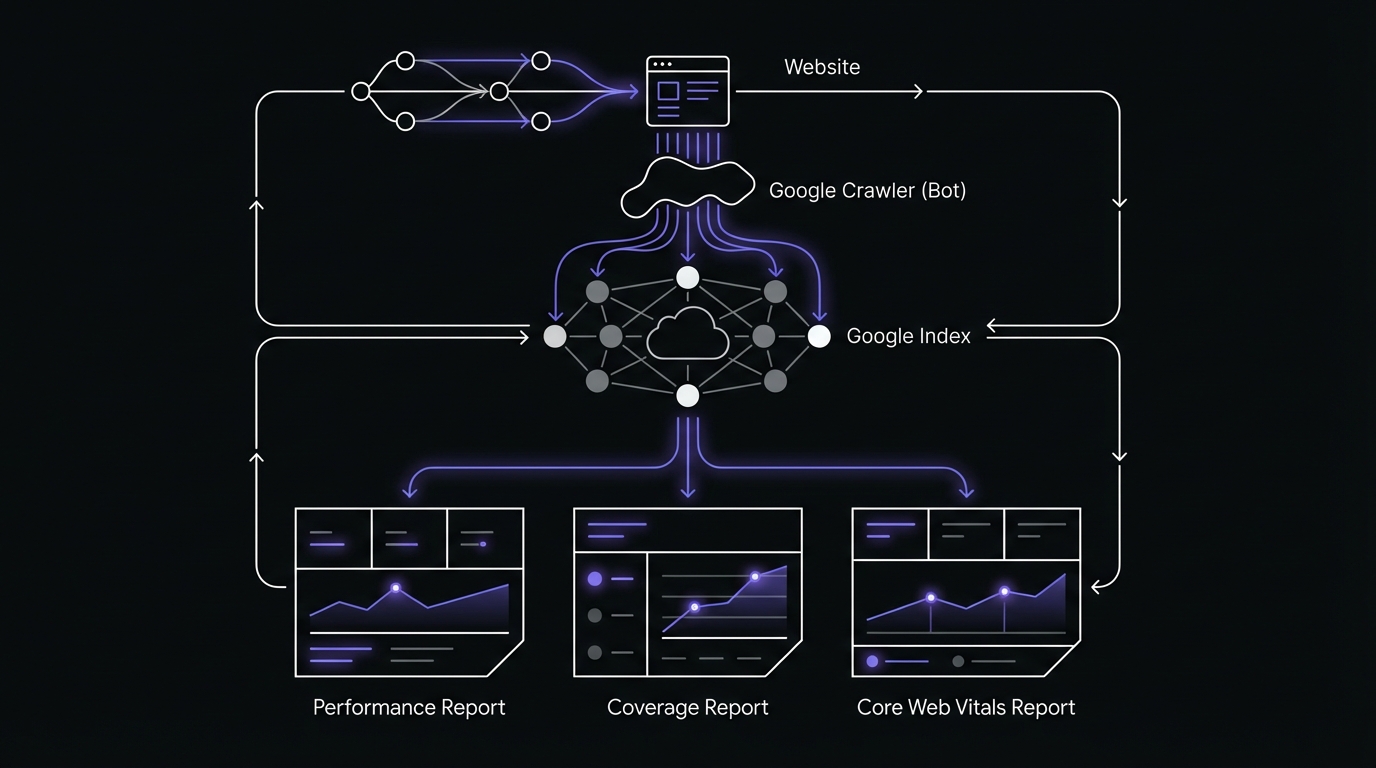

Performance Report: Your Most Important Data

The Performance Report is where you'll spend most of your GSC time. It shows how your site performs in Google Search: which queries trigger your pages, how often you appear, how often users click, and what positions you rank at.

Related guides:

- How to Read Your GSC Performance Report (Beginner's Guide) - Start here if you're new to the Performance Report

- Understanding the GSC Performance Report: What Your Data Is Really Telling You - interpret patterns and trends

- GSC Filters and Comparisons: Complete Tutorial - Master advanced filtering techniques

Understanding the Four Core Metrics

Clicks

What it measures: The number of times a user clicked through to your website from a Google Search result.

What counts as a click:

- User clicks your search result

- User clicks "More results" on a featured snippet then clicks your result

- Clicks from Google Images

What doesn't count:

- User views result but doesn't click

- User clicks then immediately backs out

- Clicks from Google Discover and Google News (separate reports)

Clicks are not identical to GA sessions, but they represent users actively choosing your site.

Impressions

What it measures: How many times your site appeared in search results, regardless of whether it was scrolled into view.

When Google counts an impression:

- Your result appears in search results (even below the fold)

- User opens search results page containing your link

- Pagination: Each page of results counts separately

What doesn't count:

- Result exists but user doesn't reach that page of results

- Result filtered out by personalization

High impressions with low clicks indicate CTR optimization opportunities. Low impressions mean ranking or targeting issues. To understand the relationship between these metrics and what it reveals about your content, see our analysis of GSC impressions vs clicks and how to read the gap.

CTR (Click-Through Rate)

What it measures: Percentage of impressions that resulted in clicks.

Calculation: (Clicks / Impressions) × 100

Example: 100 clicks from 2,000 impressions = 5% CTR

What's "good" CTR:

- Position 1: 25-35% (broad queries), 40-60% (branded queries)

- Position 2-3: 10-20%

- Position 4-5: 7-12%

- Position 6-10: 3-8%

- Position 11-20: 1-3%

Factors affecting CTR:

- Search position

- Title tag quality

- Meta description appeal

- Rich results (FAQ, ratings, etc.)

- Brand recognition

- Query intent match

- Competition in SERP

Low CTR at high positions signals an optimization opportunity. High CTR at low positions suggests a strong title and description but a need for better rankings. For a comprehensive analysis of what your CTR data reveals and how to act on it, see our guide on how to interpret GSC CTR data.
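The CTR formula and the benchmark ranges above can be combined into a quick check. The sketch below is illustrative only: the function names are hypothetical, the ranges are copied from this guide's list (using the broad-query range for position 1), and rounding the average position to the nearest whole rank is a simplifying assumption.

```python
# (first_position, last_position, low_pct, high_pct), taken from the ranges above.
CTR_BENCHMARKS = [
    (1, 1, 25.0, 35.0),
    (2, 3, 10.0, 20.0),
    (4, 5, 7.0, 12.0),
    (6, 10, 3.0, 8.0),
    (11, 20, 1.0, 3.0),
]


def ctr_percent(clicks, impressions):
    """CTR = (clicks / impressions) x 100, with 0 impressions treated as 0% CTR."""
    return 0.0 if impressions == 0 else 100 * clicks / impressions


def ctr_status(clicks, impressions, avg_position):
    """Compare a row's CTR with the benchmark range for its rounded position."""
    ctr = ctr_percent(clicks, impressions)
    rank = round(avg_position)
    for first, last, low_pct, high_pct in CTR_BENCHMARKS:
        if first <= rank <= last:
            if ctr < low_pct:
                return "below benchmark"
            if ctr > high_pct:
                return "above benchmark"
            return "within benchmark"
    return "no benchmark"  # positions beyond 20 are not covered by the list
```

With the example from above, `ctr_percent(100, 2000)` gives 5.0; at an average position of 2.4 that CTR falls below the 10-20% range, which would flag the title and meta description for review.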

Average Position

The most misunderstood metric in GSC.

What it measures: The average position your site appeared at across all impressions for a query.

How it's calculated:

- Not your current ranking

- Average across all impressions in the date range

- Weighted by impression volume

- Can be decimal (e.g., 4.7)

Example calculation:

- Query "seo tools" had:

  - 100 impressions at position 5

  - 50 impressions at position 8

- Average position = (100 × 5 + 50 × 8) / 150 = 6.0

Why it fluctuates:

- Rankings change throughout the day

- Personalization shows different results to different users

- Different result types

- Geographic variations

- Featured snippet appearances

- SERP features pushing results down

What it tells you:

- Approximate visibility range

- Trend direction

- Comparative performance across queries

- Pages close to page 1

What it doesn't tell you:

- Your exact ranking right now

- Ranking for specific user in specific location

- Competitive positioning

Common misinterpretation: "My average position is 7, but when I search, I'm in position 5!"

- Explanation: Your personal search shows one snapshot. Average position reflects thousands of impressions across different users, locations, times, and personalization factors.

For a deeper look at what average position means and how to use it effectively, read our complete guide on position tracking and average position explained.

[Screenshot: Performance Report dashboard with all four metrics]

[Chart: Average CTR by position benchmark data]
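The impression-weighted average described in this section can be written in a few lines. This is a sketch of the arithmetic only; `average_position` is a hypothetical name, and GSC computes the real figure internally from data you cannot see at this granularity.

```python
def average_position(observations):
    """Impression-weighted average position.

    observations: iterable of (impressions, position) pairs.
    """
    observations = list(observations)
    total_impressions = sum(impressions for impressions, _ in observations)
    if total_impressions == 0:
        raise ValueError("average position is undefined without impressions")
    weighted_sum = sum(impressions * position for impressions, position in observations)
    return weighted_sum / total_impressions


# The "seo tools" example: 100 impressions at position 5, 50 at position 8.
example = average_position([(100, 5), (50, 8)])  # (500 + 400) / 150 = 6.0
```

Because each position is weighted by its impressions, a handful of impressions at a poor position barely moves the average, while a large batch does.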

Reading the Performance Chart

The line graph at the top of the Performance Report reveals traffic patterns and trends.

Trend Identification Techniques

Upward trend: Consistent growth week-over-week

- Good signs: Seasonal content gaining traction, new content performing, technical improvements paying off

- Action: Identify what's working and double down

Downward trend: Consistent decline

- Potential causes: Algorithm update, competitor gains, technical issues, seasonality

- Action: Investigate index coverage, check for penalties, analyze specific queries dropping

Plateau: Flat performance

- Indication: Hit ceiling for current strategy

- Action: Expand keyword targeting, improve content quality, build links

Spiky pattern: Erratic ups and downs

- Common causes: News/trending topics, manual indexing issues, crawl budget problems

- Action: Investigate specific dates with spikes/drops

Seasonal pattern: Predictable cyclical changes

- Examples: Tax prep (January-April peak), Holiday gifts

- Action: Prepare content in advance of seasonal peaks

[Screenshot: Performance chart showing various trend patterns]

Comparing Date Ranges Effectively

Year-over-Year (YoY) comparison:

- Compare last 28 days to same 28 days last year

- Accounts for seasonality

- Shows true growth independent of seasonal fluctuations

- Best for: Understanding genuine growth trends

Month-over-Month (MoM) comparison:

- Compare this month to last month

- Shows recent changes quickly

- Affected by seasonality

- Best for: Detecting recent issues or wins

Week-over-Week (WoW) comparison:

- Compare last 7 days to previous 7 days

- Detects immediate changes

- Highly volatile

- Best for: Tracking recent optimizations or diagnosing sudden drops

Custom comparisons:

- Compare pre/post algorithm update

- Compare before/after site migration

- Compare before/after content refresh

How to compare in GSC:

- Select date range (e.g., Last 28 days)

- Click "Compare" tab

- Choose comparison type or custom dates

- View metrics with +/- change indicators

[Screenshot: Date comparison view showing year-over-year analysis]
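When you work with exported data instead of the GSC interface, the same comparisons come down to picking two date windows and computing a percentage change. A minimal sketch follows; the function names are hypothetical, and shifting the year-over-year window by 364 days (52 weeks) so that weekdays line up is a design choice of this example, not something GSC prescribes.

```python
from datetime import date, timedelta


def previous_period(start, end):
    """Return the equally long window that ends the day before `start`."""
    length_days = (end - start).days + 1
    prev_end = start - timedelta(days=1)
    prev_start = prev_end - timedelta(days=length_days - 1)
    return prev_start, prev_end


def same_period_last_year(start, end):
    """Shift the window back 52 weeks so each day matches the same weekday."""
    shift = timedelta(days=364)
    return start - shift, end - shift


def percent_change(current, previous):
    """Percentage change from `previous` to `current`; None if there is no baseline."""
    if previous == 0:
        return None
    return (current - previous) * 100 / previous


# A 28-day window and the two comparison windows derived from it.
window = (date(2025, 3, 1), date(2025, 3, 28))
wow_style = previous_period(*window)
yoy_style = same_period_last_year(*window)
```

Going from 1,000 clicks in the earlier window to 1,200 in the current one, `percent_change(1200, 1000)` returns 20.0.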

The Five Performance Report Views

Queries View

Shows search terms that triggered your site in results.

What you can find:

- Keywords you rank for (known and unknown)

- Search volume proxies

- Ranking opportunities

- Branded vs non-branded query performance

- Misspellings and variations driving traffic

How to use Queries view:

Find opportunity queries:

- Filter by position 3-10

- Sort by impressions (descending)

- Look for high-impression queries with low CTR

- Optimize title tags and meta descriptions for these queries

Identify content gaps:

- Sort by impressions

- Find queries where you rank but don't have dedicated content

- Create targeted content for high-volume queries

Discover new keywords:

- Export query data

- Identify patterns in queries you didn't target

- Expand content to capture more variations

Track branded search:

- Filter queries containing brand name

- Monitor branded search growth as brand awareness metric

- Protect branded SERPs from competitors

Find low-hanging fruit:

- Filter positions 11-20 (page 2)

- Sort by impressions

- Optimize pages ranking on page 2 to reach page 1

For a complete walkthrough of finding and prioritizing keyword opportunities using the Queries report, see our detailed guide on the GSC Queries report and how to find your biggest opportunities.

[Screenshot: Queries report showing opportunity queries highlighted]
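The "opportunity queries" and "low-hanging fruit" workflows above apply the same filter-then-sort pattern, which is easy to repeat on exported data. The sketch below assumes each row is a dict with `query`, `clicks`, `impressions`, and `position` keys; those key names and the `opportunity_queries` helper are assumptions of this example, so map them to your export's column names.

```python
def opportunity_queries(rows, min_pos=3.0, max_pos=10.0, max_ctr_pct=None):
    """Keep rows in a position band, optionally cap CTR, sort by impressions.

    rows: dicts with 'query', 'clicks', 'impressions', 'position'.
    max_ctr_pct: if given, drop rows whose CTR (in percent) is above it.
    """
    selected = []
    for row in rows:
        if not (min_pos <= row["position"] <= max_pos):
            continue
        impressions = row["impressions"]
        ctr_pct = 100 * row["clicks"] / impressions if impressions else 0.0
        if max_ctr_pct is not None and ctr_pct > max_ctr_pct:
            continue
        selected.append({**row, "ctr_pct": ctr_pct})
    # Highest-impression rows first, mirroring "Sort by impressions (descending)".
    return sorted(selected, key=lambda r: r["impressions"], reverse=True)


sample_rows = [
    {"query": "a", "clicks": 10, "impressions": 1000, "position": 4.2},
    {"query": "b", "clicks": 50, "impressions": 500, "position": 1.3},
    {"query": "c", "clicks": 5, "impressions": 2000, "position": 14.0},
    {"query": "d", "clicks": 30, "impressions": 3000, "position": 8.9},
]
page_one = opportunity_queries(sample_rows)                    # positions 3-10
page_two = opportunity_queries(sample_rows, 11.0, 20.0)        # positions 11-20
```

Calling it with the default band reproduces the first workflow; passing 11 and 20 reproduces the page-2 workflow.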

Pages View

Shows individual URLs that appeared in search results.

What you can find:

- Top-performing content

- Underperforming pages that need optimization

- Pages losing traffic

- Pages gaining traffic

- Cannibalization issues

How to use Pages view:

Identify top performers:

- Sort by clicks

- Analyze what makes these pages successful

- Replicate success patterns in other content

Find underperformers:

- Sort by impressions

- Find pages with high impressions but low clicks

- Optimize titles and meta descriptions

Detect declining pages:

- Compare to previous period

- Sort by click change

- Investigate pages with significant drops

Keyword cannibalization check:

- Click on a page

- Go to Queries tab

- See what queries drive this page

- Check if other pages target the same queries

For a systematic approach to identifying your best and worst performing pages and what to do about them, read our comprehensive Pages report analysis guide.

Content audit prioritization:

- Export pages data

- Identify pages with impressions but zero clicks

- Prioritize for optimization or consolidation

[Screenshot: Pages report showing top performers and underperformers]
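The keyword cannibalization check above is slow to do page by page; with data broken down by both query and page, it can be done in one pass. This is a sketch under assumptions: rows are dicts with `query`, `page`, and `impressions` keys (hypothetical names for this example), and `find_cannibalization` is not a GSC feature.

```python
from collections import defaultdict


def find_cannibalization(rows, min_impressions=1):
    """Return {query: [pages]} for queries where two or more pages appear.

    rows: dicts with 'query', 'page', 'impressions'.
    Pages are ordered from most to fewest impressions for that query.
    """
    impressions_by_query = defaultdict(dict)
    for row in rows:
        if row["impressions"] < min_impressions:
            continue
        pages = impressions_by_query[row["query"]]
        pages[row["page"]] = pages.get(row["page"], 0) + row["impressions"]

    return {
        query: sorted(pages, key=pages.get, reverse=True)
        for query, pages in impressions_by_query.items()
        if len(pages) > 1
    }


sample = [
    {"query": "seo tools", "page": "/a", "impressions": 100},
    {"query": "seo tools", "page": "/b", "impressions": 300},
    {"query": "gsc guide", "page": "/c", "impressions": 50},
]
overlaps = find_cannibalization(sample)
```

Two pages appearing for one query is not automatically a problem; raising `min_impressions` helps filter out incidental overlaps before you review the list by hand.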

Countries View

Shows search performance by country/region.

What you can find:

- Geographic distribution of search traffic

- International expansion opportunities

- Unexpected country performance

- Hreflang issues

How to use Countries view:

Identify expansion opportunities:

- Sort by impressions

- Find countries with high impressions but low clicks

- Consider creating localized content for these markets

Verify international targeting:

- Check if primary country aligns with target market

- If wrong country dominates, review hreflang tags

- Ensure content language matches intent

Detect regional trends:

- Compare performance across countries

- Identify where your content resonates most

- Tailor content strategy by market

For international sites or those looking to expand into new markets, our guide on analyzing the GSC Countries report for international performance insights provides detailed strategies for geographic optimization.

[Screenshot: Countries report showing geographic performance]

Devices View

Shows performance segmented by device type: Desktop, Mobile, Tablet.

What you can find:

- Mobile vs desktop performance differences

- Mobile-first indexing impact

- Device-specific CTR patterns

- Mobile usability issues indicated by low mobile CTR

How to use Devices view:

Mobile-first indexing check:

- Compare clicks and impressions across devices

- Mobile should show comparable or higher impressions

- If mobile significantly lags, investigate mobile usability issues

Device-specific CTR analysis:

- Compare CTR across devices

- Lower mobile CTR may indicate title tags cut off on mobile

- Higher desktop CTR for certain queries may indicate desktop-preferred intent

Optimization prioritization:

- Identify which device drives most traffic

- Optimize experience for dominant device first

- Ensure mobile parity (mobile-first era requirement)

Given that Google uses mobile-first indexing, understanding device performance differences is critical. Our detailed Devices report analysis guide shows you how to identify and fix mobile vs desktop performance gaps.

[Screenshot: Devices comparison showing mobile vs desktop performance]

Search Appearance View

Shows performance by rich result type (if you have structured data).

Rich result types:

- Regular results (standard blue links)

- AMP articles

- FAQ

- HowTo

- Product (with ratings/price)

- Recipe

- Event

- Job posting

- Video

What you can find:

- Impact of rich results on CTR

- Which structured data implementations are working

- Opportunities to add structured data

How to use Search Appearance:

Measure rich result impact:

- Compare CTR of FAQ results vs regular results

- Quantify CTR lift from structured data

- Calculate ROI of schema implementation

Identify opportunities:

- See which result types you don't have

- Identify content eligible for rich results

- Implement appropriate schema markup

Rich results can dramatically improve your click-through rates and visibility. For a complete analysis of how different rich result types perform and which ones to prioritize, see our guide on understanding Search Appearance and rich results impact.

[Screenshot: Search Appearance showing FAQ rich results vs regular results]

Advanced Filtering Techniques

Filters transform GSC from a basic reporting tool into a powerful analysis platform.

Query Filtering Strategies

Filter by query type:

- Branded: Queries containing "Company Name" → Shows brand awareness

- Non-branded: Queries NOT containing "Company Name" → Shows SEO effectiveness

- Question queries: Queries containing "how" or "what" or "why" → Shows informational content performance

- Commercial intent: Queries containing "buy" or "best" or "review" → Shows bottom-funnel performance

Example filter: Find non-branded question queries

- Query contains "how"

- Query doesn't contain "Company Name"

- Sort by impressions

- Reveals informational content opportunities

URL Filtering with Regex

Regular expressions (regex) enable sophisticated page filtering.

Common regex patterns:

Filter blog posts:

- Custom filter: Page URL contains regex /blog/

Filter product pages:

- Custom filter: Page URL contains regex /product/

Filter by category:

- Custom filter: Page URL contains regex /category/[^/]+/?$

Exclude parameters:

- Custom filter: Page URL doesn't contain regex \?

Multiple subdirectories:

- Custom filter: Page URL contains regex /(blog|news|articles)/

[Screenshot: Advanced filter showing regex URL filtering]
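Before pasting a pattern into a GSC filter, it can help to try it against a few of your own URLs. The sketch below uses Python's `re` module as a stand-in; GSC filters use RE2 syntax, and for simple patterns like the ones above the two are assumed to behave the same. `PATTERNS` and `matching_filters` are hypothetical names for this example.

```python
import re

# The patterns from the list above, keyed by what they are meant to select.
PATTERNS = {
    "blog": r"/blog/",
    "product": r"/product/",
    "category": r"/category/[^/]+/?$",
    "has_parameters": r"\?",
    "multi_section": r"/(blog|news|articles)/",
}


def matching_filters(url):
    """Return the names of all patterns found anywhere in `url` (partial match)."""
    return sorted(name for name, pattern in PATTERNS.items() if re.search(pattern, url))


checks = {
    "https://example.com/blog/post": matching_filters("https://example.com/blog/post"),
    "https://example.com/category/shoes/": matching_filters("https://example.com/category/shoes/"),
    "https://example.com/category/shoes/red": matching_filters("https://example.com/category/shoes/red"),
    "https://example.com/product/1?ref=x": matching_filters("https://example.com/product/1?ref=x"),
}
```

Note how the category pattern matches `/category/shoes/` but not `/category/shoes/red`: the `$` anchor restricts it to top-level category URLs, which is easy to miss when reading the pattern alone.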

Combining Filters for Deep Analysis

The real power comes from combining multiple filters.

Example 1: Non-branded blog performance

- Page: URL contains /blog/

- Query: Doesn't contain "Brand Name"

- Result: See how blog posts perform for non-branded search

Example 2: Mobile opportunity queries

- Device: Mobile

- Position: 3-10

- Sort by: Impressions

- Result: High-impression mobile queries where you rank on page 1 but below the top positions

Example 3: Question content by country

- Query: Contains "how to"

- Country: United States

- Page: URL contains /guides/

- Result: How US users engage with tutorial content

Example 4: Low-CTR high-rankings

- Position: 1-3

- CTR: < 15%

- Sort by: Impressions

- Result: Pages ranking well but not getting clicks
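Examples 2 and 4 can also be reproduced on exported data. This is a rough sketch, assuming each row is a dictionary with `query`, `device`, `clicks`, `impressions`, and `position` keys; that shape is an assumption for illustration, not the exact GSC export format.

```python
def striking_distance(rows, device="MOBILE", min_position=3.0, max_position=10.0):
    """Example 2: rows for one device in positions 3-10, most impressions first."""
    selected = [
        row for row in rows
        if row["device"] == device and min_position <= row["position"] <= max_position
    ]
    return sorted(selected, key=lambda row: row["impressions"], reverse=True)


def low_ctr_top_ranked(rows, max_position=3.0, max_ctr=0.15):
    """Example 4: rows ranking in the top 3 with CTR below 15%."""
    selected = [
        row for row in rows
        if row["position"] <= max_position
        and row["impressions"] > 0
        and row["clicks"] / row["impressions"] < max_ctr
    ]
    return sorted(selected, key=lambda row: row["impressions"], reverse=True)
```

CTR is recomputed from clicks and impressions rather than read from a column, which avoids ambiguity about whether an export stores CTR as a fraction or a percentage.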

Custom vs Type Filters

Type filters:

- Built-in GSC filters for common attributes

- Options: Contains, Doesn't contain, Exactly matches, Custom (regex) Inclusion filters:

- Show only data matching criteria

- Use for: Focusing on specific segment Exclusion filters:

- Remove data matching criteria

- Use for: Filtering out noise

Common Performance Report Mistakes

Mistake #1: Only Looking at Total Clicks

The problem: Total clicks are a vanity metric without context. What to do instead:

- Analyze click trends over time

- Segment by query type

- Compare clicks to impressions (CTR context)

- Drill into pages and queries driving clicks

Mistake #2: Ignoring Impression Data

The problem: Looking only at clicks ignores impressions, which show your visibility and the full picture of your search presence. Why impressions matter:

- High impressions + low clicks = CTR optimization opportunity

- Declining impressions = ranking problem

- Low impressions = visibility/targeting problem

- Impressions approximate search volume What to do:

- Always view clicks AND impressions together

- Sort by impressions to find opportunity

- Track impression trends as leading indicator

Mistake #3: Not Using Date Comparisons

The problem: Absolute numbers lack context. Is 1,000 clicks good? Depends on whether it's up or down. What to do instead:

- Always compare to previous period

- Use year-over-year to account for seasonality

- Track week-over-week to catch issues early
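The comparison itself is simple arithmetic: percentage change relative to the earlier period. A minimal sketch, assuming each period is summarized as a dictionary of metric totals (the metric names here are illustrative):

```python
from typing import Optional


def percent_change(current: float, previous: float) -> Optional[float]:
    """Percentage change from previous to current; None if previous is zero."""
    if previous == 0:
        return None
    return (current - previous) * 100 / previous


def compare_periods(current: dict, previous: dict) -> dict:
    """Percentage change for every metric present in both periods."""
    return {
        metric: percent_change(current[metric], previous[metric])
        for metric in current
        if metric in previous
    }
```

For example, 1,000 clicks against 800 in the previous period is a 25% gain, while the same 1,000 clicks against 1,250 is a 20% decline.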

Mistake #4: Misunderstanding Average Position

The problem: Treating average position as current ranking. Common misconceptions:

- "I'm position 7.2"

- "My position dropped 0.3 points—disaster!"

- "When I search I'm #5, not #7!" What to do instead:

- Use average position for trend direction

- Don't obsess over decimal changes

- Use dedicated rank tracking tools for precise rankings

- Focus on position ranges, not exact numbers
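A small worked example shows why "position 7.2" is not a real ranking. Average position is a mean taken across impressions, so it blends many different rankings into one number. The per-impression positions below are invented for illustration (GSC does not expose them individually), and the range labels are an arbitrary choice.

```python
def average_position(positions):
    """Mean of the position recorded for each impression."""
    if not positions:
        raise ValueError("at least one impression is required")
    return sum(positions) / len(positions)


def position_range(avg):
    """Map an average position to a coarse range that is safer to act on."""
    if avg <= 3:
        return "top 3"
    if avg <= 10:
        return "page 1 (4-10)"
    if avg <= 20:
        return "page 2 (11-20)"
    return "beyond page 2"


# Ten impressions where the page actually ranked 5th, 7th, 9th, or 10th.
impressions = [5, 5, 5, 5, 7, 7, 9, 9, 10, 10]
print(average_position(impressions))                  # mean of 72 / 10
print(position_range(average_position(impressions)))
```

The page never ranked at "7.2" for anyone, and a shift of a few tenths leaves it in the same range, which is why trend direction and ranges are more useful than the decimal.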

Mistake #5: Not Filtering Data

The problem: Generic aggregated data obscures insights. What to do instead:

- Filter to analyze specific segments

- Separate branded and non-branded performance

- Analyze device performance separately

- Filter by content type

Mistake #6: Forgetting About Data Sampling

The problem: GSC has limitations that affect what you see. Limitations to remember:

- 1,000 row limit in UI (use API for more)

- Anonymized queries (rare queries are withheld for privacy, so query rows won't add up to your site totals)

- 16-month data retention What to do instead:

- Use filters to access different 1,000-row segments

- Export data regularly for historical archive

- Use GSC API for sites with >1,000 queries/pages

- Accept you won't see 100% of query data [Link to: Understanding GSC's Data Sampling and Limitations (Cluster #2)]
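To get past the 1,000-row UI limit, the Search Analytics API can be paged with `startRow` and `rowLimit`. The sketch below shows only the paging logic. `fetch_page` is a hypothetical callable you supply that sends one request body and returns its list of rows, and the 25,000-row cap per request reflects the API limit at the time of writing and should be verified against current documentation.

```python
MAX_ROWS_PER_REQUEST = 25_000  # assumed API cap per request; verify before relying on it


def build_request(start_date, end_date, dimensions, start_row=0,
                  row_limit=MAX_ROWS_PER_REQUEST):
    """Build one Search Analytics query body."""
    return {
        "startDate": start_date,
        "endDate": end_date,
        "dimensions": dimensions,
        "rowLimit": row_limit,
        "startRow": start_row,
    }


def fetch_all_rows(fetch_page, start_date, end_date, dimensions,
                   row_limit=MAX_ROWS_PER_REQUEST):
    """Yield rows page by page until a short (or empty) page is returned.

    fetch_page(body) is a placeholder for your own API call. With
    google-api-python-client it would typically wrap something like
    service.searchanalytics().query(siteUrl=..., body=body).execute()
    and return the response's "rows" list (empty if absent).
    """
    start_row = 0
    while True:
        rows = fetch_page(build_request(start_date, end_date, dimensions,
                                        start_row, row_limit))
        yield from rows
        if len(rows) < row_limit:
            break
        start_row += row_limit
```

Keeping the network call behind `fetch_page` means the paging logic can be tested with fake data and reused with whichever client library or authentication setup you prefer.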

Mistake #7: Not Acting on Data

The biggest mistake of all. The problem: Viewing reports without implementing insights is wasted effort. What to do instead:

- Create action items from every GSC session

- Prioritize based on potential impact

- Track optimizations and measure results

- Build a regular GSC workflow with outcomes Example action workflow:

- Weekly: Review performance trends → Flag anomalies

- Bi-weekly: Query analysis → Identify 5 optimization opportunities

- Monthly: Page analysis → Content audit priorities

- Quarterly: Comprehensive audit → Strategic adjustments

[Infographic: 7 Common GSC Mistakes checklist]

[Link to: How to Read Your GSC Performance Report (Beginner's Guide) (Cluster #4)]

[Link to: Understanding the GSC Performance Report: What Your Data Is Really Telling You (Cluster #5)]

Index Coverage Report: Ensuring Google Sees Your Content

You can create perfect content, but if Google doesn't index it, it won't rank. The Index Coverage Report shows exactly which pages Google has indexed, which it hasn't, and why. For a comprehensive walkthrough of diagnosing and fixing indexing issues, see our complete guide to the GSC Index Coverage Report.

The Four Status Categories

Valid: Pages Successfully Indexed

What it means: Google crawled these pages, found no significant issues, and included them in the search index. Subcategories:

- Submitted and indexed: Pages in your sitemap that were successfully indexed

- Indexed, not submitted in sitemap: Pages Google discovered and indexed without sitemap submission What to check:

- Ensure important pages are in "Submitted and indexed"

- Review "Indexed, not submitted" for pages that should be in sitemap or shouldn't be indexed

Valid with Warnings: Indexed but with Issues

What it means: Pages are indexed but have non-critical problems that should be addressed. Common warnings:

- Indexed, though blocked by robots.txt: Page is indexed, but robots.txt blocks crawling, so Google can't read the content and the page is at risk of future deindexing

What to do:

- Review robots.txt to ensure you're not accidentally blocking important pages

- Fix blocking if unintentional

- If intentional, use noindex instead and allow crawling, because Google can't see a noindex directive on a page it is blocked from crawling
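A quick way to review robots.txt against a list of important URLs is Python's standard-library parser. This is a rough check only: Python applies rules in file order, while Google uses the most specific matching rule, so results can differ when Allow and Disallow rules overlap. The robots.txt content and URLs in the usage example are made up.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    robots = "User-agent: *\nDisallow: /admin/\nDisallow: /blog/drafts/\n"
    important = [
        "https://example.com/blog/post",
        "https://example.com/blog/drafts/new",
        "https://example.com/admin/login",
    ]
    print(blocked_urls(robots, important))
```

Any important URL that shows up in the output is a candidate for the "fix blocking if unintentional" step above.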

Error: Pages Not Indexed Due to Errors

What it means: Critical issues preventing indexing. These pages won't appear in search results. Common errors:

- Server error (5xx): Website returned server error when Google tried to crawl

- Redirect error: Page has redirect issue

- 404 not found: Page doesn't exist or returns 404

- Blocked by robots.txt: Robots.txt explicitly blocks Googlebot

- Soft 404: Page returns 200 status but appears to be an error page

- Submitted URL marked 'noindex': Sitemap includes page with noindex tag

- Page with redirect: Page redirects to another URL

- Submitted URL not found (404): Sitemap includes URL that returns 404 Priority: Fix these immediately—they represent lost ranking opportunities. [Screenshot: Index Coverage dashboard showing status categories]

Excluded: Pages Intentionally or Unintentionally Not Indexed

What it means: Google found these pages but chose not to index them, or you explicitly told Google not to.

Common exclusions:

Intentional exclusions (usually fine):

- Excluded by 'noindex' tag: Page has noindex directive

- Blocked by robots.txt: You're intentionally blocking crawling

- Removed by URL removal tool: You requested temporary removal Unintentional exclusions (need attention):

- Crawled - currently not indexed: Google crawled but decided not to index

- Discovered - currently not crawled: Google found the URL but hasn't crawled it yet

- Duplicate, Google chose different canonical: Page is duplicate, Google indexed a different version

- Alternate page with proper canonical tag: Page properly points to canonical version

- Duplicate without user-selected canonical: Google detected duplicate and chose canonical for you

- Duplicate, submitted URL not selected as canonical: Sitemap includes non-canonical version

- Not found (404): Historical; page used to exist

Most concerning: "Crawled - currently not indexed"

- Indicates Google doesn't think page is valuable enough to index

- Common on low-quality, thin, or duplicate content

- Also affects sites with crawl budget constraints [Screenshot: Excluded section showing "Crawled - currently not indexed" detail]

Common Indexing Issues

Server Errors (5xx)

What causes this:

- Website server is down or slow

- Hosting resource limits exceeded

- Database connection issues

- Plugin/module conflicts causing crashes How to fix:

- Check server logs for actual errors

- Test affected URLs directly

- Contact hosting if server problems persist

- Optimize database queries if timeout issues

- Request validation after fixing

Redirect Errors

What causes this:

- Redirect chains

- Redirect loops (A → B → A)

- Redirects to URLs that also redirect

- Too many redirects (>5 in chain) How to fix:

- Identify redirect chains with Screaming Frog or similar tool

- Update redirects to point directly to final destination

- Fix any redirect loops

- Update internal links to avoid redirects entirely

- Request validation
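Chains and loops are easy to detect once you have a map of source URL to redirect target, for example from a crawler export. The sketch below assumes such a map as a plain dictionary (the paths in the usage example are hypothetical) and uses the 5-hop limit mentioned above as its default.

```python
def trace_redirects(start, redirects, max_hops=5):
    """Follow redirects from start and return (status, path)."""
    path = [start]
    current = start
    while current in redirects:
        current = redirects[current]
        if current in path:
            # The target was already visited: A -> B -> A style loop.
            return "loop", path + [current]
        path.append(current)
        if len(path) - 1 > max_hops:
            return "too many redirects", path
    hops = len(path) - 1
    if hops == 0:
        return "no redirect", path
    if hops == 1:
        return "single redirect", path
    return "chain", path


if __name__ == "__main__":
    redirect_map = {"/a": "/b", "/b": "/c", "/x": "/y", "/y": "/x", "/old": "/new"}
    for url in ("/old", "/a", "/x"):
        print(url, trace_redirects(url, redirect_map))
```

For every URL reported as a chain, the last entry in the path is the final destination that the first redirect (and any internal links) should point to directly.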

Soft 404s

What causes this:

- Page returns 200 status code but contains no content or error message

- "Product not found" pages returning 200 instead of 404

- Search results pages with no results returning 200

- Thin content pages Google interprets as errors How to fix:

- Ensure actual 404 pages return proper 404 status code

- Add substantial content to thin pages

- Return 404 for truly non-existent content

- Return 410 for permanently removed content

Duplicate Content Issues

What causes this:

- Multiple URLs with identical/similar content

- www vs non-www both accessible

- http and https both accessible

- URL parameters creating duplicates (?id=123)

- Paginated pages with near-identical content (Google no longer uses rel=prev/next as an indexing signal)

- Print versions of pages

- AMP and canonical version conflicts How to fix:

- Implement proper canonical tags pointing to preferred version

- Use 301 redirects to consolidate duplicate URLs

- Handle URL parameters with canonical tags and consistent internal links (GSC's legacy URL Parameters tool was retired in 2022)

- Block parameter URLs in robots.txt if appropriate

- Ensure www/non-www redirect to one version

- Ensure http redirects to https [Screenshot: Duplicate content issue detail with canonical recommendation]
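To see how many duplicate variants a URL list contains, you can normalize each URL to its preferred form and group the results. This is a minimal sketch, assuming `www.example.com` over https is the preferred version and that the listed tracking parameters are safe to drop; both are placeholders to adjust for your own site.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that never change page content.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}


def preferred_url(url, preferred_host="www.example.com"):
    """Normalize scheme, host, and query string to the preferred version."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    bare_host = preferred_host[4:] if preferred_host.startswith("www.") else preferred_host
    if host in {preferred_host, bare_host}:
        host = preferred_host
    query = urlencode([
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ])
    return urlunsplit(("https", host, parts.path or "/", query, ""))


def group_duplicates(urls):
    """Map each preferred URL to the list of variants that collapse into it."""
    groups = defaultdict(list)
    for url in urls:
        groups[preferred_url(url)].append(url)
    return dict(groups)
```

Any group with more than one variant is a candidate for a canonical tag or 301 redirect pointing at the preferred URL.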

Crawled But Not Indexed

The most frustrating issue. What causes this:

- Low content quality

- Duplicate or near-duplicate content

- Orphaned pages

- Low crawl priority

- Crawl budget limitations (large sites)

- Recently published content (be patient)

- Low page authority (new site or page) How to fix:

- Improve content quality:

- Add depth and value

- Make content unique

- Address search intent comprehensively

- Build internal links:

- Link from high-authority pages

- Add to navigation if important

- Include in related content sections

- Improve page authority:

- Earn external links

- Update content regularly

- Improve user engagement signals

- Reduce crawl depth:

- Flatten site architecture

- Improve internal linking

- Be patient:

- New content can take weeks to index

- Request indexing via URL Inspection tool

- But don't spam requests When to worry:

- Important pages not indexed after 4+ weeks

- Commercial pages (products, services) not indexing

- Well-linked pages still excluded When it's okay:

- Low-value pages

- Truly duplicate content you don't need indexed

- Very new content (<2 weeks old) [Screenshot: "Crawled - currently not indexed" status with examples]

Prioritizing Index Issues

Not all indexing issues are equally important. Focus on high-impact fixes first. Priority 1 (Fix immediately):

- Server errors on important pages

- Homepage or key landing pages not indexed

- Product/service pages showing errors

- Recent significant increase in errors Priority 2 (Fix this week):

- Redirect errors on linked pages

- Soft 404s on pages that should exist

- Crawled but not indexed on important content

- Submitted URLs marked noindex (unintentionally) Priority 3 (Fix this month):

- Minor duplicate content issues

- Excluded low-priority pages

- Old 404 errors from URLs that never should have existed When "Excluded" is good:

- Thank you pages

- Internal search results pages

- Cart and checkout pages

- Paginated page 2+

- Tag and category pages with thin content

- Admin and account pages [Table: Index issues priority matrix showing severity vs frequency]

The Validation Process

After fixing indexing issues, request validation to tell Google you've resolved them. How validation works:

- You fix the underlying issue

- You click "Validate Fix" in GSC

- Google adds affected URLs to validation queue

- Google recrawls sample URLs immediately

- If sample passes, Google recrawls remaining URLs over time

- Status updates as validation progresses Validation states:

- Not started: You haven't requested validation

- Started: Validation in progress

- Passed: All sampled URLs passed validation

- Failed: Sample URLs still have issues How long validation takes:

- Sample check: Few days

- Complete validation: 2-4 weeks typically

- Large sets: Can take several weeks What if validation fails:

- Review specific URLs that failed

- Test URLs manually to confirm fix

- Verify fix is deployed correctly

- Wait a few days and request validation again

- Check for new issues introduced by fix Pro tips:

- Don't request validation until you've fixed the issue

- One validation request per issue is enough (don't spam)

- Track validation progress in validation history

- If validation fails repeatedly, there may be intermittent issues [Screenshot: Validation workflow and progress states] [Flowchart: Decision tree for addressing index issues] [Link to: How to Read GSC Index Coverage Report (Cluster #17)]

URL Inspection Tool: Explore Individual Pages

The URL Inspection Tool is your diagnostic microscope for individual pages. The Index Coverage Report shows site-wide patterns, while URL Inspection reveals what Google knows about a specific URL. For a complete walkthrough of using this powerful tool to diagnose page-level issues, see our GSC URL Inspection Tool guide.

Index Status

Is the page in Google's index?

- URL is on Google: Page is indexed and can appear in search results

- URL is not on Google: Page is not indexed (shows reason why) Last crawl date:

- When Googlebot last accessed this page

- If never crawled, shows "Google hasn't crawled this URL yet" Indexing status:

- Allowed or blocked by robots.txt

- Indexing allowed or blocked by noindex

- Canonical URL

Crawl Information

User agent: Which Googlebot crawled this page

- Smartphone Googlebot

- Desktop Googlebot (rare, mostly legacy) Crawl allowed/disallowed:

- Whether robots.txt permits crawling

- Shows specific robots.txt rule if blocked Page fetch status:

- Success: Page loaded successfully

- Failure: Server error, timeout, etc. Indexing allowed:

- Whether meta robots or X-Robots-Tag allows indexing

- Shows noindex directive if present

Page Resources

Whether JavaScript, CSS, and images loaded:

- Success: All resources accessible to Google

- Failures: Blocked resources Coverage report: Details on which resources loaded or failed

Mobile Usability

Mobile-friendly status:

- Page is mobile-friendly

- Page is not mobile-friendly (shows issues) Mobile usability errors:

- Text too small to read

- Clickable elements too close

- Content wider than screen

- Viewport not set

Rich Results Eligibility

Which rich results this page qualifies for:

- FAQ

- HowTo

- Product

- Recipe

- Event

- Job posting

- etc. Structured data detected:

- Valid structured data found

- Errors or warnings in structured data

Canonical URL

Which URL Google considers canonical:

- User-declared canonical (your canonical tag)

- Google-selected canonical

Problem: Page Not Indexed

Diagnostic process:

- noindex? Remove noindex tag

- Canonicalized away? Fix canonical implementation

- Crawl error? Fix server issue

- Run live test to confirm fix

- Request indexing

Problem: Rich Results Not Showing

Diagnostic process:

- Inspect URL

- Scroll to "Enhancements" or "Rich results" section

- Check status:

- Eligible: Structured data valid, may show in search (not guaranteed)

- Errors: Structured data has errors, won't show rich results

- Not detected: No structured data found

- Review specific errors

- Fix structured data

- Test live URL to confirm

- Request indexing

- Rich results may take days/weeks to appear even after fix

Problem: Mobile Usability Issues

Diagnostic process:

- Inspect URL

- Review "Mobile usability" section

- Check specific issues:

- Text too small? Increase font size

- Clickable elements too close? Add spacing

- Content wider than screen? Fix responsive design

- Viewport not set? Add viewport meta tag

- Fix issues

- Test live URL to confirm resolved

- Request indexing to update mobile assessment

Problem: Canonical Issues

Diagnostic process:

- Inspect URL

- Review canonical section

- Compare:

- User-declared canonical: What you specified

- Google-selected canonical: What Google uses

- If they differ:

- Google thinks this is a duplicate

- Google selected different URL as canonical

- Review why Google overrode

- Fix:

- Consolidate duplicate content

- Improve canonicalization signals

- Use 301 redirects if appropriate

- Strengthen internal linking to preferred version [Diagram: URL Inspection troubleshooting workflow] [Link to: GSC URL Inspection Tool: Complete Analysis Guide (Cluster #21)]

Core Web Vitals Report: Page Experience Signals

Core Web Vitals measure real user experience on your pages. Google uses these metrics as ranking signals, making them important for UX and SEO. For a detailed breakdown of what each metric means and how to prioritize improvements, see our comprehensive guide on GSC Core Web Vitals interpretation.

Understanding the Three Vitals

LCP (Largest Contentful Paint)

What it measures: How long it takes for the largest content element to load and become visible. Users perceive pages with fast LCP as loading quickly. What counts as "largest":

- Images

- Video thumbnail

- Background images (via CSS)

- Block-level text elements Thresholds:

- Good: ≤ 2.5 seconds

- Needs Improvement: 2.5 - 4.0 seconds

- Poor: > 4.0 seconds How to improve LCP:

- Optimize images:

- Compress images (WebP format)

- Use responsive images (srcset)

- Lazy load offscreen images

- Preload hero images

- Improve server response time:

- Use faster hosting

- Implement server-side caching

- Use CDN

- Optimize database queries

- Eliminate render-blocking resources:

- Defer non-critical JavaScript

- Inline critical CSS

- Minimize CSS

- Optimize web fonts:

- Use font-display: swap

- Preload key fonts

- Subset fonts (only needed characters)

INP (Interaction to Next Paint)

What it measures: How quickly the page responds to user interactions (clicks, taps, keyboard input). Slow INP frustrates users trying to interact with your page. INP replaced FID (First Input Delay) in March 2024 and provides a more comprehensive interactivity measurement. Thresholds:

- Good: ≤ 200 milliseconds

- Needs Improvement: 200 - 500 milliseconds

- Poor: > 500 milliseconds How to improve INP:

- Minimize JavaScript execution:

- Break up long tasks

- Defer non-essential JavaScript

- Use code splitting

- Remove unused JavaScript

- Optimize event handlers:

- Debounce input handlers

- Use passive event listeners

- Minimize work in handlers

- Reduce main thread work:

- Move processing to Web Workers

- Optimize complex calculations

- Avoid layout thrashing

- Improve server response:

- Faster API responses

- Cache where appropriate

CLS (Cumulative Layout Shift)

What it measures: Visual stability—how much content shifts around unexpectedly while loading. What causes layout shifts:

- Images without dimensions

- Ads, embeds, iframes without reserved space

- Fonts loading and causing text reflow

- Dynamically injected content

- CSS animations triggering layout changes Thresholds:

- Good: ≤ 0.1

- Needs Improvement: 0.1 - 0.25

- Poor: > 0.25 How to improve CLS:

- Set dimensions on images and videos:

- Always include width and height attributes

- CSS aspect-ratio for responsive images

- Reserve space for ads and embeds:

- Set minimum height for ad slots

- Use placeholder for embeds

- Avoid inserting content above existing content:

- Don't inject content that pushes existing content down

- Reserve space for dynamic content

- Optimize font loading:

- Use font-display: optional or swap

- Preload fonts

- Match fallback font metrics

- Avoid animations that trigger layout:

- Use transform and opacity (don't trigger layout)

- Avoid animating width, height, margin, padding [Diagram: Visual explanation of LCP, INP, and CLS with illustrations]

Reading Your Core Web Vitals Data

Good, Needs Improvement, Poor Buckets

GSC categorizes URLs into three buckets for each vital:

- Good: Meets threshold for good user experience

- Needs Improvement: Marginal, should improve

- Poor: Below acceptable threshold URL classification:

- A URL is considered "Poor" if even one vital is poor

- Shows in "Good" only if ALL vitals are good

- "Needs Improvement" if some vitals need improvement but none are poor
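The bucket rules above can be written down directly. This sketch uses the thresholds given earlier in this section (LCP in seconds, INP in milliseconds, CLS unitless) and takes metric values as given; it does not model how GSC aggregates field data across users or groups similar URLs.

```python
# (good upper bound, needs-improvement upper bound) for each vital.
THRESHOLDS = {
    "lcp": (2.5, 4.0),   # seconds
    "inp": (200, 500),   # milliseconds
    "cls": (0.1, 0.25),  # unitless layout shift score
}


def rate_metric(name, value):
    """Classify a single vital as good, needs improvement, or poor."""
    good_max, needs_improvement_max = THRESHOLDS[name]
    if value <= good_max:
        return "good"
    if value <= needs_improvement_max:
        return "needs improvement"
    return "poor"


def rate_url(metrics):
    """A URL takes the rating of its worst vital."""
    ratings = [rate_metric(name, value) for name, value in metrics.items()]
    if "poor" in ratings:
        return "poor"
    if "needs improvement" in ratings:
        return "needs improvement"
    return "good"
```

For instance, a URL with a 2.1 s LCP and 0.05 CLS but a 350 ms INP lands in "Needs Improvement", because one vital misses the good threshold and none is poor.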

Mobile vs Desktop

Core Web Vitals report separates mobile and desktop performance. Why the split:

- Mobile typically performs worse

- Google uses mobile version for ranking

- Users on mobile have different expectations Focus priority:

- Fix mobile first

- Ensure mobile meets "Good" thresholds

- Then address desktop if needed Common patterns:

- Mobile shows issues, desktop is fine → Optimize for mobile specifically

- Both show issues → Fundamental performance problems

- Desktop shows issues, mobile is fine → Unusual, investigate desktop-specific assets

Field Data vs Lab Data

Field data (Real User Monitoring - RUM):

- Actual performance experienced by real users

- Collected from Chrome users who opted in to usage statistics

- What GSC shows

- Reflects real-world conditions

- This is what Google uses for rankings Lab data (Synthetic testing):

- Simulated testing in controlled environment

- Tools: PageSpeed Insights, Lighthouse, WebPageTest

- Consistent testing conditions

- Good for development and debugging

- Not used for rankings, but helpful for finding issues Why they differ:

- Field data varies by actual user conditions

- Lab data uses standard device and network

- Field data may include outliers (very slow devices) Use both:

- Field data (GSC) to understand real user experience

- Lab data (PageSpeed Insights) to diagnose and fix issues

URL-Level vs Aggregate Data

Aggregate view:

- Shows total count of URLs in each bucket

- Trend over time

- Overall site health snapshot URL-level view:

- Specific URLs with issues

- Grouped by similar issues

- Prioritization data How to use:

- Start with aggregate to understand overall status

- Drill into "Poor" URLs

- Review grouped URLs (similar issues)

- Fix issues on example URLs

- Fixes propagate to similar URLs

- Track improvement over time [Screenshot: Core Web Vitals dashboard showing mobile and desktop] [Chart: Threshold breakdown showing good/needs improvement/poor ranges]

Prioritizing Core Web Vitals Fixes

Not all CWV issues impact your rankings equally. High priority:

- Pages with significant traffic in "Poor" status

- Commercial pages

- Mobile issues

- LCP and CLS Medium priority:

- Pages with moderate traffic in "Needs Improvement"

- Blog and informational content

- Desktop issues

- INP improvements Lower priority:

- Low-traffic pages

- Pages passing all vitals but could improve further

Impact on rankings:

Realistic expectations:

- Core Web Vitals are a ranking signal, but not the strongest

- Content relevance and links matter more

- CWV acts as a tiebreaker between similar pages

- Poor CWV can hold you back from top positions

- Good CWV alone won't make poor content rank User experience correlation:

- Faster pages have lower bounce rates

- Better CLS improves engagement

- Good INP increases interaction rates

- UX improvements often matter more than ranking boost

Quick wins vs long-term improvements:

Quick wins (implement first):

- Set image dimensions (fixes CLS)

- Optimize images (improves LCP)

- Defer non-critical JavaScript

- Preload critical resources (improves LCP)

- Use font-display: swap Long-term improvements (bigger effort):

- Upgrade hosting/server

- Implement comprehensive caching strategy

- Refactor JavaScript architecture

- Migrate to faster framework

- Implement edge caching with CDN When to hire a developer:

- Complex JavaScript issues causing INP problems

- Server-side optimizations needed

- Architectural changes required

- Multiple vitals in "Poor" after attempting fixes

- Fixes require code refactoring [Screenshot: URL details showing specific Core Web Vitals issues] [Link to: GSC Core Web Vitals Report Interpretation Guide (Cluster #19)]

Mobile Usability Report

With mobile-first indexing, Google primarily uses your mobile page version for ranking. The Mobile Usability Report identifies issues that prevent good mobile experience. To learn how to prioritize and fix these issues systematically, read our guide on mobile usability issues and how to prioritize fixes.

Common Mobile Issues

Text Too Small to Read

The problem: Font size is too small for mobile screens, forcing users to zoom in. Threshold: Text smaller than 12px is flagged How to fix:

- Set minimum font size to 16px for body text

- Use relative units (em, rem) instead of px

- Ensure font scales appropriately across devices

- Test on actual mobile devices CSS example:

body {
  font-size: 16px; /* Minimum for readability */
}

Clickable Elements Too Close Together

The problem: Links, buttons, or other tap targets are too close, causing mis-taps. Threshold: Tap targets smaller than 48px × 48px that sit too close to neighboring targets are flagged. How to fix:

- Minimum 48px × 48px for touch targets

- Add padding/margin around buttons and links

- Increase spacing in navigation menus

- Test touch interactions on mobile devices CSS example:

button, a {
  min-height: 48px;
  padding: 12px 16px;
  margin: 4px; /* Space between elements */
}

Content Wider Than Screen

The problem: Content extends beyond viewport width, requiring horizontal scrolling. Common causes:

- Fixed-width elements

- Images without max-width

- Tables without responsive styling

- iframe embeds with fixed width How to fix:

- Use responsive width units (%, vw, rem)

- Set max-width: 100% on images

- Make tables scrollable or stack on mobile

- Use responsive embed containers CSS example:

img {
  max-width: 100%;
  height: auto;
}

.container {
  max-width: 100%;
  overflow-x: hidden;
}

Viewport Not Set

The problem: Missing or incorrect viewport meta tag causes mobile rendering issues. What viewport tag does:

- Tells browser how to scale page on mobile devices

- Essential for responsive design

- Without it, mobile browsers render at desktop width then scale down

How to fix:

Add viewport meta tag to <head>:

<meta name="viewport" content="width=device-width, initial-scale=1">

Common mistakes:

- Adding maximum-scale=1, user-scalable=no (prevents users from zooming)

- Wrong syntax or typos

- Missing entirely

[Screenshot: Mobile Usability report overview]
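The viewport mistakes listed above can be checked automatically across templates. This is a small sketch using Python's built-in HTML parser. The issue messages are this example's own wording, and the check covers only the three mistakes named in this section.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Record the content attribute of the viewport meta tag, if any."""

    def __init__(self):
        super().__init__()
        self.content = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "viewport":
            self.content = attributes.get("content") or ""


def _as_float(value):
    try:
        return float(value)
    except (TypeError, ValueError):
        return None


def viewport_issues(html):
    """Return a list of viewport problems found in the HTML (empty if none)."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.content is None:
        return ["missing viewport meta tag"]
    settings = {}
    for part in finder.content.split(","):
        key, _, value = part.partition("=")
        settings[key.strip().lower()] = value.strip().lower()
    issues = []
    if settings.get("width") != "device-width":
        issues.append("width is not device-width")
    max_scale = _as_float(settings.get("maximum-scale"))
    if max_scale is not None and max_scale <= 1:
        issues.append("maximum-scale limits zooming")
    if settings.get("user-scalable") in {"no", "0"}:
        issues.append("user-scalable=no disables zooming")
    return issues
```

Running `viewport_issues` over the rendered HTML of each page template gives a quick list of templates to fix before requesting validation.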

Testing and Fixing Mobile Issues

Mobile-Friendly Test Tool

Google provides a dedicated mobile testing tool:

URL: search.google.com/test/mobile-friendly

How to use:

- Enter your URL

- Wait for test to complete

- Review results:

- "Page is mobile-friendly" → ✓ Good

- "Page is not mobile-friendly" → Issues listed

- Fix identified issues

- Retest Advantages:

- Tests any URL (even not in GSC)

- Provides screenshot of how Google renders page

- Shows specific issues found

- No GSC access required

Using Browser Dev Tools

Chrome DevTools provides powerful mobile testing capabilities. How to test:

- Open page in Chrome

- Press F12 (or right-click → Inspect)

- Click device toggle icon (or Ctrl+Shift+M)

- Select device preset or set custom dimensions

- Test touch interactions and scrolling

- Use throttling to simulate slow connections What to test:

- Tap target sizes

- Text readability at actual size (no zooming)

- Horizontal scrolling (shouldn't exist)

- Navigation usability

- Form input experience

- Load time on slow connection (use throttling)

Testing Across Real Devices

Simulator testing is good, but real device testing is essential. Minimum test devices:

- iPhone

- Android phone

- Older Android What to test:

- Touch interactions feel natural

- Text is comfortably readable

- Page loads at acceptable speed

- Forms are easy to complete

- Navigation is intuitive Device cloud services:

- BrowserStack

- Sauce Labs

- LambdaTest

Validation Process

- Inspect URL in GSC URL Inspection tool

- Run live test to confirm mobile-friendly status

- Click "Validate Fix" in Mobile Usability report

- Wait for validation (days to weeks)

- Track validation progress

- Issues should disappear from report once validated

Timeline:

- Fixes go live → Immediate

- Test confirms fix → Minutes

- Google recrawls → Days to weeks

- Validation completes → Weeks

- Report updates → After validation [Screenshot: Mobile usability errors with examples] [Before/after: Mobile issue fixed comparison] [Link to: Mobile Usability Issues: How to Prioritize Fixes (Cluster #20)]

Enhancements: Rich Results and Structured Data

Rich results make your search listings stand out with additional visual elements—ratings, images, FAQs, pricing, and more. The Enhancements section shows which rich results you're eligible for and tracks their performance.

Types of Rich Results

FAQ Schema

What it is: Displays frequently asked questions directly in search results Eligibility requirements:

- Valid FAQ structured data

- Questions and answers format

- At least 2 FAQ items

- Content matches page content Impact:

- Significantly increases SERP real estate

- Can push competitors down

- Improves CTR by 20-40% on average

- Provides immediate value to searchers Example use cases:

- FAQ pages

- Product pages with common questions

- Blog posts answering multiple related questions [Screenshot: FAQ rich results in search results]
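FAQ structured data is usually embedded as JSON-LD using schema.org's FAQPage type. The sketch below generates that markup from a list of question and answer pairs. The function name and sample questions are illustrative, and the two-item minimum simply mirrors the eligibility requirement stated above.

```python
import json


def faq_jsonld(items):
    """Build FAQPage JSON-LD from (question, answer) pairs."""
    if len(items) < 2:
        raise ValueError("provide at least 2 question/answer pairs")
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in items
        ],
    }
    return json.dumps(data, indent=2)


if __name__ == "__main__":
    print(faq_jsonld([
        ("What is Google Search Console?", "A free tool for monitoring search performance."),
        ("How long is data retained?", "Performance data is kept for 16 months."),
    ]))
```

The output goes inside a `<script type="application/ld+json">` element on the page, and the questions and answers must match the visible page content.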

HowTo Schema

What it is: Displays step-by-step instructions in search results Eligibility requirements:

- Valid HowTo structured data

- Minimum 2 steps

- Each step has descriptive text

- Steps lead to actionable outcome Impact:

- Appears for "how to" queries

- Shows visual step guide

- Improves visibility for tutorial content

- Can include images for each step Example use cases:

- Tutorial articles

- Recipe instructions

- DIY guides

- Installation instructions

Product Schema

What it is: Displays product information like price, availability, ratings Eligibility requirements:

- Valid Product structured data

- Price and currency

- Availability status

- Product name and image Optional but recommended:

- Aggregate rating (star display)

- Review count

- Brand Impact:

- Shows price and availability in search

- Star ratings improve CTR dramatically (30-50% lift)

- Essential for e-commerce SEO

- Appears in Google Shopping results (free listings) Example use cases:

- Product pages

- E-commerce listings

- Service offerings with pricing

Recipe Schema

What it is: Displays cooking time, ratings, calories for recipes Eligibility requirements:

- Valid Recipe structured data

- Recipe name and image

- Ingredients list

- Instructions Optional but recommended:

- Prep time, cook time

- Nutrition information

- Ratings and reviews

- Recipe category Impact:

- Appears in recipe search features

- Shows in Google Images

- Dedicated recipe carousel

- Critical for food blogs Example use cases:

- Recipe blog posts

- Cooking tutorials

- Food websites

Event Schema

What it is: Displays event details like date, location, price Eligibility requirements:

- Valid Event structured data

- Event name

- Start date and time

- Location (physical or virtual) Optional:

- End date/time

- Ticket price and availability

- Organizer information

- Image Impact:

- Appears in event searches

- Shows in Google Events experience

- Local discovery

- Important for event promotion Example use cases:

- Conference pages

- Concert and show listings

- Webinar announcements

- Local event pages

Review Schema

What it is: Displays ratings and review information Eligibility requirements:

- Valid Review structured data

- Item being reviewed

- Rating value

- Author name

Google's restrictions:

- Can't be self-reviews (your own business)

- Must be independent reviews

- Editorial reviews only

Impact:

- Star ratings in search results

- Increases CTR significantly

- Trust signals

- Competitive advantage

Example use cases:

- Product reviews

- Service reviews

- Book/movie reviews

- Software comparisons

[Examples: Different rich result types in SERPs]

Monitoring Rich Result Performance

The Enhancements section tracks rich results separately.

What you can see:

Per enhancement type:

- Total impressions for pages with this enhancement

- Total clicks

- CTR

- Valid pages count

- Error pages count

- Warning pages count

URL-level details:

- Which specific pages have this enhancement

- Issues preventing rich results

- Validation status

- Examples of detected structured data

How to analyze:

Compare CTR with and without rich results:

- Note CTR for pages with FAQ schema

- Compare to similar pages without schema

- Calculate CTR lift from implementation

- Use for ROI calculation of schema efforts

Track rich result impressions:

- Monitor trend over time

- Increasing impressions = more rich result appearances

- Decreasing = potential issues or algorithm changes

Identify errors:

- Review error and warning counts

- Click into specific errors

- See which pages affected

- Fix structured data issues

- Validate fixes

Common errors:

FAQ schema:

- Duplicate questions

- Question doesn't have answer

- Answer too short

- Invalid markup syntax

Product schema:

- Missing price or availability

- Invalid price format

- Missing required properties

- Review schema issues (if included)

HowTo schema:

- Too few steps (minimum 2)

- Steps don't form coherent instruction

- Missing step descriptions

- Invalid image URLs

Recipe schema:

- Missing required ingredients or steps

- Invalid time format

- Nutrition values in wrong format

[Screenshot: Enhancements section overview showing different types]

[Screenshot: FAQ rich results performance data]
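
The CTR comparison described under "How to analyze" reduces to simple arithmetic once the two page groups are exported. This sketch assumes rows with `clicks` and `impressions` fields; the page paths and numbers are invented, and `aggregate_ctr` and `ctr_lift` are hypothetical helper names. CTR is aggregated as total clicks over total impressions, so high-traffic pages carry more weight than they would in an average of per-page CTRs.

```python
def aggregate_ctr(rows):
    """CTR for a group of pages: total clicks divided by total impressions."""
    clicks = sum(row["clicks"] for row in rows)
    impressions = sum(row["impressions"] for row in rows)
    return clicks / impressions if impressions else 0.0


def ctr_lift(with_schema, without_schema):
    """Relative CTR lift of the schema group over the comparison group."""
    baseline = aggregate_ctr(without_schema)
    if baseline == 0:
        raise ValueError("comparison group has no clicks; lift is undefined")
    return (aggregate_ctr(with_schema) - baseline) / baseline


# Invented sample data standing in for two filtered exports.
pages_with_faq = [
    {"page": "/faq-a", "clicks": 120, "impressions": 2000},
    {"page": "/faq-b", "clicks": 80, "impressions": 2000},
]
pages_without_faq = [
    {"page": "/guide-c", "clicks": 60, "impressions": 2500},
    {"page": "/guide-d", "clicks": 40, "impressions": 1500},
]

lift = ctr_lift(pages_with_faq, pages_without_faq)
print(f"CTR with schema:    {aggregate_ctr(pages_with_faq):.2%}")
print(f"CTR without schema: {aggregate_ctr(pages_without_faq):.2%}")
print(f"Relative lift:      {lift:.0%}")
```

Treat the result as directional: the two groups should rank at similar average positions, otherwise position differences rather than the rich result may explain the gap.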

Testing Structured Data

Before deployment and after fixes, test your structured data:

Rich Results Test:

- URL: search.google.com/test/rich-results

- Tests if page is eligible for rich results

- Shows detected structured data

- Identifies errors and warnings

- Provides code view of detected schema

Schema Markup Validator:

- URL: validator.schema.org

- Validates schema syntax

- Comprehensive error detection

- Supports all schema types

- Technical validation

Testing workflow:

- Add structured data to page

- Test with Rich Results Test

- Fix any errors shown

- Validate with Schema Validator

- Deploy to live site

- Inspect URL in GSC

- Check if structured data detected

- Request indexing

- Monitor Enhancements report for appearance

Timeline for rich results to appear:

- Structured data deployed → Immediate

- Google detects (inspect URL) → Hours to days

- Appears in Enhancements report → Days to week

- Shows in actual search results → Days to weeks (not guaranteed)

- Performance data appears → After rich results show in search

Valid markup does not guarantee a rich result. Whether one appears depends on:

- Query relevance

- Schema quality and accuracy

- Content quality

- User intent

- SERP composition

- Testing and algorithmic factors

[Link to: Search Appearance in GSC: Understanding Rich Results Impact (Cluster #11)]
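
Before running the Rich Results Test, a quick local check can catch obviously missing properties. The sketch below pulls JSON-LD blocks out of a page's HTML and compares them against a simplified `REQUIRED` table drawn from the eligibility lists in this guide. That table and the `missing_properties` helper are assumptions for illustration, not Google's validation rules, and the check complements the official tools rather than replacing them.

```python
import json
from html.parser import HTMLParser

# Simplified, hypothetical requirement table based on the lists in this guide.
REQUIRED = {
    "Product": ["name", "image", "offers"],
    "Recipe": ["name", "image", "recipeIngredient", "recipeInstructions"],
    "Event": ["name", "startDate", "location"],
}


class JsonLdExtractor(HTMLParser):
    """Collect the raw text of every application/ld+json script block."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            self.blocks.append("".join(self._buffer))


def missing_properties(html):
    """Return a list of human-readable problems found in the page's JSON-LD."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    problems = []
    for raw in parser.blocks:
        try:
            parsed = json.loads(raw)
        except json.JSONDecodeError as exc:
            problems.append(f"invalid JSON: {exc.msg}")
            continue
        for entry in parsed if isinstance(parsed, list) else [parsed]:
            if not isinstance(entry, dict):
                continue
            schema_type = entry.get("@type")
            if not isinstance(schema_type, str):
                continue  # multi-typed entries are out of scope for this sketch
            for prop in REQUIRED.get(schema_type, []):
                if not entry.get(prop):
                    problems.append(f"{schema_type}: missing {prop}")
    return problems


# Invented page fragment: an Event that lacks a start date.
sample_html = (
    '<html><head><script type="application/ld+json">'
    '{"@context": "https://schema.org", "@type": "Event", "name": "Launch", '
    '"location": {"@type": "VirtualLocation", "url": "https://example.com/live"}}'
    "</script></head></html>"
)
print(missing_properties(sample_html))
```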

Security & Manual Actions {#security-manual-actions}

The Security and Manual Actions sections alert you to serious issues that can remove your site from search results entirely.

Security Issues

Google monitors sites for security problems that could harm searchers.

Types of security issues:

Hacked Content

What it is: Your site was compromised and now contains injected content

Common hacking types:

- Japanese keyword hack

- Pharmaceutical hack (spam about pills)

- Cloaking hack

- Redirect hack

How you'll know:

- Security Issues panel shows alert

- Email notification (if enabled)

- Search results may show "This site may be hacked" warning

- Significant drop in traffic

How to fix:

- Identify how site was hacked

- Clean up hacked content

- Update all software, plugins, themes

- Change all passwords

- Review file permissions

- Implement security measures

- Request review in GSC

Malware

What it is: Your site attempts to install malicious software on visitors' devices

Signs:

- Browser warnings on your site

- "The site ahead contains malware" message

- Sudden traffic drop

- Security alert in GSC

How to fix:

- Scan entire website for malware

- Remove malicious code

- Identify entry point (how malware was installed)

- Update security measures

- Scan server environment

- Request review in GSC

Phishing

What it is: Your site attempts to trick users into providing sensitive information

Examples:

- Fake login pages

- Impersonating another website

- Social engineering attacks

How to fix:

- Remove all phishing content

- Identify how content was added (hacked vs intentional)

- Ensure no legitimate pages could be misconstrued as phishing

- Request review in GSC

Prevention tactics:

Basic security:

- Keep all software updated

- Use strong, unique passwords

- Enable two-factor authentication

- Use secure hosting

- Implement HTTPS

- Regular security audits

Advanced security:

- Web Application Firewall (WAF)

- Security monitoring service

- Regular malware scanning

- File integrity monitoring

- Limited file permissions

- Disable file editing in CMS admin

[Screenshot: Security Issues panel showing clean status]
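
File integrity monitoring, listed among the advanced tactics above, boils down to hashing every file and comparing the result with a known-good baseline. The following is a minimal sketch using only the standard library. The function names and the example path are hypothetical, and a production setup would also store the baseline somewhere an attacker cannot modify it.

```python
import hashlib
from pathlib import Path


def snapshot(root):
    """Map each file under root (by relative path) to its SHA-256 digest."""
    root = Path(root)
    hashes = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            hashes[str(path.relative_to(root))] = digest
    return hashes


def diff_snapshots(baseline, current):
    """Report files added, removed, or changed since the baseline was taken."""
    shared = baseline.keys() & current.keys()
    return {
        "added": sorted(current.keys() - baseline.keys()),
        "removed": sorted(baseline.keys() - current.keys()),
        "changed": sorted(p for p in shared if baseline[p] != current[p]),
    }


if __name__ == "__main__":
    # Hypothetical web root; take the baseline right after a clean deploy.
    web_root = Path("/var/www/example-site")
    if web_root.exists():
        baseline = snapshot(web_root)
        print(f"Baseline recorded for {len(baseline)} files")
```

Run `snapshot` on a schedule and pass the stored baseline and the fresh result to `diff_snapshots`. Unexpected entries under `added` or `changed`, especially executable files in upload directories, are worth investigating before they surface as a Security Issues alert.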

Manual Actions

Manual actions are penalties applied by human reviewers at Google for violating Google's spam policies (part of Google Search Essentials, formerly the Webmaster Guidelines).

What triggers manual actions:

Thin Content with Little or No Value

Examples:

- Auto-generated content

- Scraped content from other sites

- Doorway pages

- Pages with little useful content

Impact: Specific pages or entire site demoted in rankings

User-Generated Spam

Examples:

- Spammy forum posts

- Comment spam

- Spammy user profiles

Impact: Affected sections demoted

How to fix: Moderate user content, remove spam, implement better spam filtering

Unnatural Links to Your Site

Examples:

- Purchased links

- Link schemes

- Low-quality directory links

- Spam blog links

Impact: Entire site or specific pages demoted

How to fix:

- Review backlink profile

- Identify unnatural links

- Contact sites to remove links

- Disavow links you can't remove

- Document cleanup efforts

- Submit reconsideration request
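
For the disavow step, Google's disavow tool accepts a plain text file with one entry per line: either a full URL or a `domain:` entry covering a whole domain, with `#` lines treated as comments. The sketch below generates such a file from the results of a link audit. The `build_disavow` helper and every domain shown are hypothetical.

```python
from urllib.parse import urlsplit


def build_disavow(domains, urls, note=""):
    """Return disavow file text: domain entries first, then individual URLs."""
    domain_set = {d.strip().lower() for d in domains if d.strip()}
    lines = [f"# {note}"] if note else []
    lines += [f"domain:{d}" for d in sorted(domain_set)]
    seen = set()
    for url in urls:
        host = urlsplit(url).hostname or ""
        # Skip duplicates and URLs already covered by a domain entry.
        if host in domain_set or url in seen:
            continue
        seen.add(url)
        lines.append(url)
    return "\n".join(lines) + "\n"


# Invented audit results for illustration only.
text = build_disavow(
    domains=["Spam-Directory.example"],
    urls=[
        "https://spam-directory.example/listing/123",
        "https://blog.example/paid-post-1",
        "https://blog.example/paid-post-1",
    ],
    note="Removal requested from site owners, no response",
)
print(text)
```

Save the output as a `.txt` file and upload it through the disavow links tool. Keeping the comment lines as a record of outreach also helps with the "document cleanup efforts" step.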

Unnatural Links from Your Site

Examples:

- Selling links

- Linking to link schemes

- Excessive link exchanges

Impact: PageRank stopped from flowing through these links

How to fix:

- Remove or nofollow unnatural outbound links

- Stop link schemes

- Submit reconsideration request

Cloaking or Sneaky Redirects

Examples:

- Showing different content to Google vs users

- Redirecting users to different page than Googlebot sees

- Mobile cloaking

Impact: Page or site removed from index

How to fix:

- Remove cloaking

- Ensure same content shown to all users and Google

- Fix redirects to be user-focused

- Request reconsideration

Pure Spam

Examples:

- Automatically generated gibberish

- Scraper sites

- Sites dedicated to spam

Impact: Site removed from search index

How to fix: Completely rebuild site with genuine, quality content

[Screenshot: Manual Actions panel showing clean status]

[Example: Manual action notification - anonymized]

How to Resolve and Request Reconsideration

3. Fix all violations:

- Remove/fix all violating content

- Document what you changed

- Ensure underlying practices changed

4. Submit reconsideration request:

- In Manual Actions panel, click "Request Review"

- Explain what you found

- Describe how you fixed it

- Provide evidence of changes

- Be honest and thorough

5. Wait for review:

- Typically 1-3 weeks

- Could be faster or slower

- Don't resubmit immediately if denied

6. If request is denied:

- Read the denial explanation

- Identify what you missed

- Fix additional issues

- Submit new request

Timeline:

- Fix issues → As long as it takes

- Submit request → Same day after fixes complete

- Google reviews → 1-3 weeks typically

- Manual action lifted → Immediately upon approval

- Rankings recover → Days to weeks after lift

Links Report: Understanding Your Backlink Profile {#links-report}

Links remain an important ranking factor. The Links report shows your backlink profile and internal linking structure.

External Links Analysis

What GSC shows:

Top Linking Sites

Sorted by number of links from each domain:

- Which domains link to you most

- Link count from each domain

- Example target URLs

How to use: